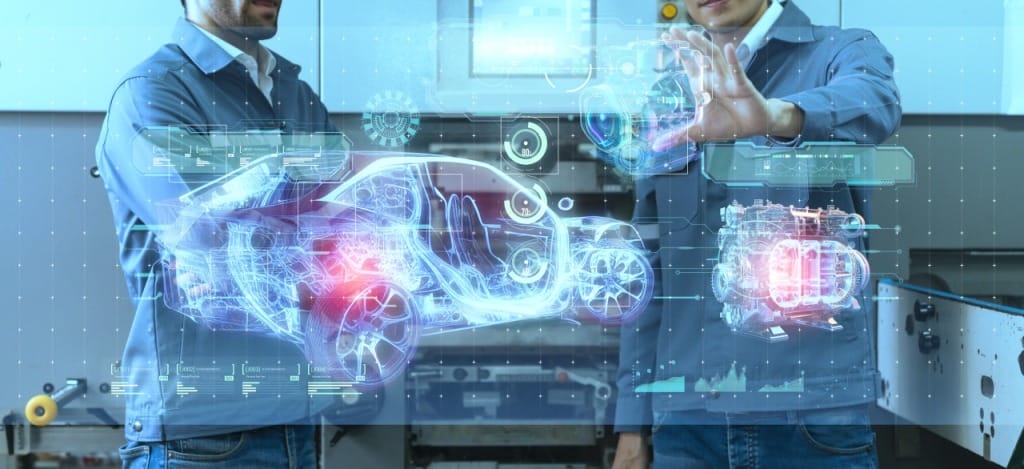

Vision for Industry 6.0: The Convergence of AI, Robotics and Human Ingenuity

Industry 6.0 is not just a technological evolution; it’s a paradigm shift that will redefine manufacturing. It blends AI, robotics, and human ingenuity in pursuit of greater efficiency, personalization, and sustainability.
